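If you use the BigQuery Fresh Daily export, you may see some traffic_source fields showing Data Not Available. This guide helps you automatically backfill most of that missing data in your existing export once the traffic source data becomes available (usually by 5 AM each day).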

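The automated backfill works as follows:

- Listen for the daily completeness signal from BigQuery.

- Identify the events in your BigQuery export that are missing traffic source data.

- Query the complete data for those events from Google Ads.

- Join the complete event data with your BigQuery Export.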
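To make the "identify" step concrete, here is a minimal sketch that works on in-memory rows rather than a real BigQuery table. It assumes, hypothetically, that each exported event is a dict with an `event_id` key and a `traffic_source` dict, and that missing values appear as the literal string `Data Not Available`; the field list `TRAFFIC_SOURCE_FIELDS` is also an assumption, so check the real export schema before relying on any of these names.

```python
from typing import Any

# Assumption: the placeholder the export writes when traffic source data is missing.
MISSING = "Data Not Available"

# Hypothetical subset of traffic_source fields to inspect.
TRAFFIC_SOURCE_FIELDS = ("source", "medium", "name")


def has_missing_traffic_source(event: dict[str, Any]) -> bool:
    """Return True if any inspected traffic_source field is absent or a placeholder."""
    traffic_source = event.get("traffic_source") or {}
    return any(
        traffic_source.get(field) in (None, MISSING)
        for field in TRAFFIC_SOURCE_FIELDS
    )


def find_events_to_backfill(events: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Select the exported events whose traffic source data needs backfilling."""
    return [event for event in events if has_missing_traffic_source(event)]


if __name__ == "__main__":
    # Illustrative rows only; not real export data.
    exported = [
        {"event_id": "e1", "traffic_source": {"source": "google", "medium": "cpc", "name": "spring_sale"}},
        {"event_id": "e2", "traffic_source": {"source": MISSING, "medium": MISSING, "name": MISSING}},
    ]
    print([e["event_id"] for e in find_events_to_backfill(exported)])
```

In practice this selection would more likely be a `WHERE` clause in a BigQuery query; the Python version only illustrates the condition being tested.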

Create a Pub/Sub topic

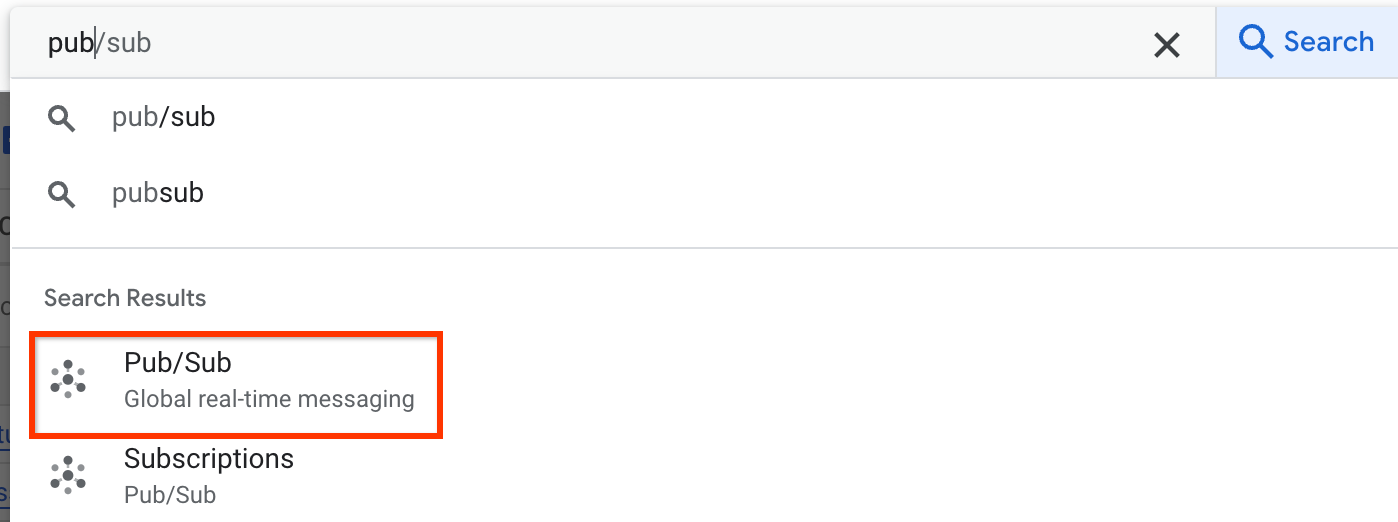

- In the left navigation menu of the Google Cloud console, open Pub/Sub. If you don't see Pub/Sub, search for it in the Google Cloud console search bar:

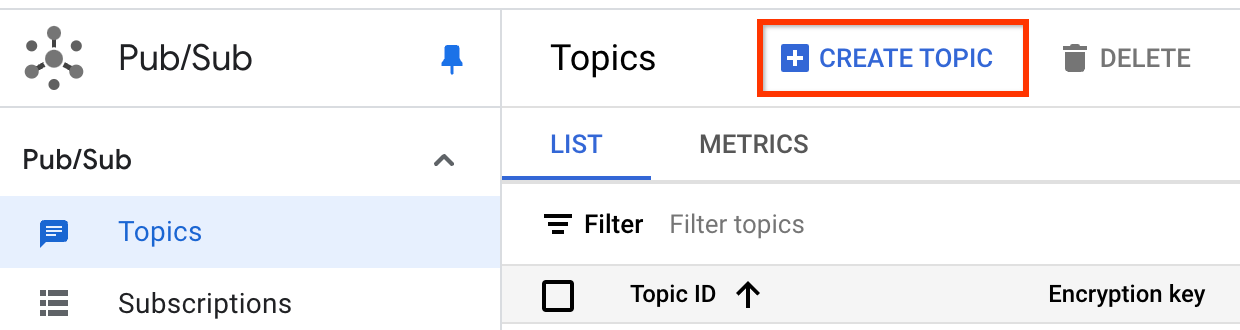

- On the Topics tab, click + Create topic.

- Enter a name in the Topic ID field.

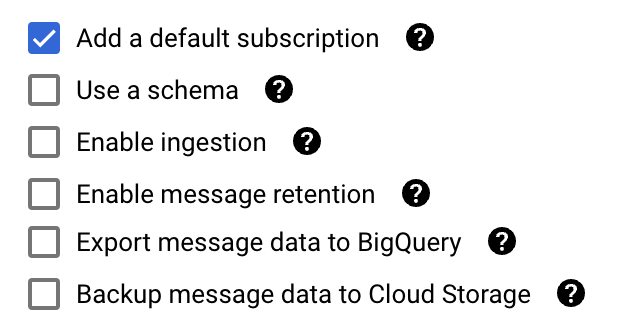

- Select Add a default subscription and leave the other options unselected.

- Click Create.

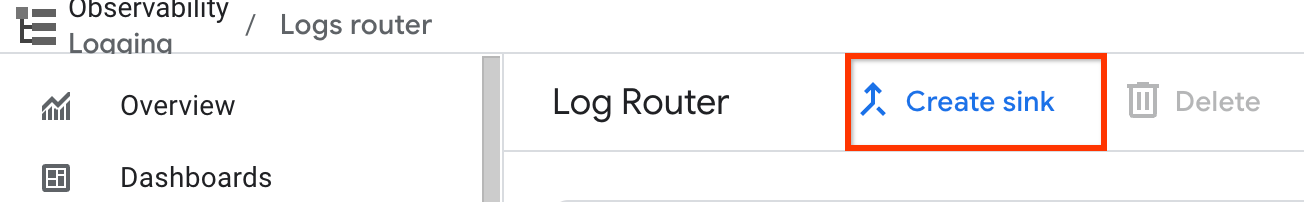
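If you ever script topic creation instead of using the console, the topic ID must follow Pub/Sub's resource-naming rules, and APIs expect the full name `projects/PROJECT_ID/topics/TOPIC_ID`. The stdlib-only sketch below checks an ID against those rules as I understand them (starts with a letter, 3 to 255 characters from letters, digits, `-`, `.`, `_`, `~`, `%`, `+`, and not beginning with `goog`); the helper names and the example IDs are hypothetical.

```python
import re

# Pub/Sub topic ID rules: a letter first, then 2-254 more characters
# from [A-Za-z0-9-._~%+] (3-255 total).
_TOPIC_ID_RE = re.compile(r"[A-Za-z][A-Za-z0-9\-._~%+]{2,254}")


def is_valid_topic_id(topic_id: str) -> bool:
    """Check a topic ID against the naming rules before creating the topic."""
    # The "goog" prefix is rejected case-insensitively here, to be conservative.
    return bool(_TOPIC_ID_RE.fullmatch(topic_id)) and not topic_id.lower().startswith("goog")


def topic_path(project_id: str, topic_id: str) -> str:
    """Build the full topic name, e.g. projects/my-project/topics/my-topic."""
    if not is_valid_topic_id(topic_id):
        raise ValueError(f"invalid Pub/Sub topic ID: {topic_id!r}")
    return f"projects/{project_id}/topics/{topic_id}"


if __name__ == "__main__":
    print(topic_path("my-project", "ga4-export-complete"))
```

The official `google-cloud-pubsub` client also offers `PublisherClient.topic_path()` for building the same string; this sketch avoids the dependency so it runs anywhere.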

Create a Log Router sink

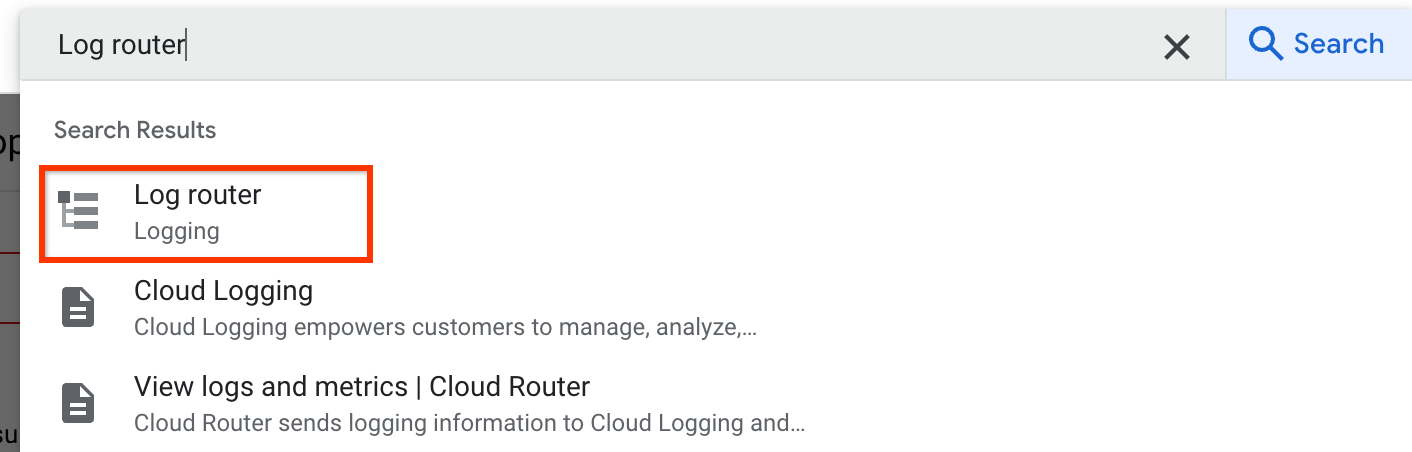

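- In the Google Cloud console, open Log Router.

- Click Create sink.

- Enter a name and description for the sink, then click Next.

- Select Cloud Pub/Sub topic as the sink service.

- Select the topic you created, then click Next.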

In Build inclusion filter, enter the following filter:

`logName="projects/YOUR-PROJECT-ID/logs/analyticsdata.googleapis.com%2Ffresh_bigquery_export_status"`

Replace YOUR-PROJECT-ID with the ID of your Google Cloud project.
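The `%2F` in the filter is the URL-encoded `/` inside the log ID. A minimal sketch, assuming you want to generate the filter per project rather than edit it by hand, can build it with `urllib.parse.quote`. It also builds the Pub/Sub sink destination string in the `pubsub.googleapis.com/projects/.../topics/...` form that Cloud Logging uses outside the console; the function names are hypothetical.

```python
from urllib.parse import quote

# Log ID from the inclusion filter above, before URL encoding.
LOG_ID = "analyticsdata.googleapis.com/fresh_bigquery_export_status"


def build_inclusion_filter(project_id: str) -> str:
    """Return the Logging inclusion filter for the export-completeness log."""
    encoded_log_id = quote(LOG_ID, safe="")  # encodes "/" as "%2F"
    return f'logName="projects/{project_id}/logs/{encoded_log_id}"'


def build_sink_destination(project_id: str, topic_id: str) -> str:
    """Return a Pub/Sub sink destination string for Cloud Logging."""
    return f"pubsub.googleapis.com/projects/{project_id}/topics/{topic_id}"


if __name__ == "__main__":
    print(build_inclusion_filter("my-project"))
    print(build_sink_destination("my-project", "ga4-export-complete"))
```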

Click Next, then click Create sink. You don't need to add any exclusion filters.

Verify that the sink appears in the list of Log Router sinks.

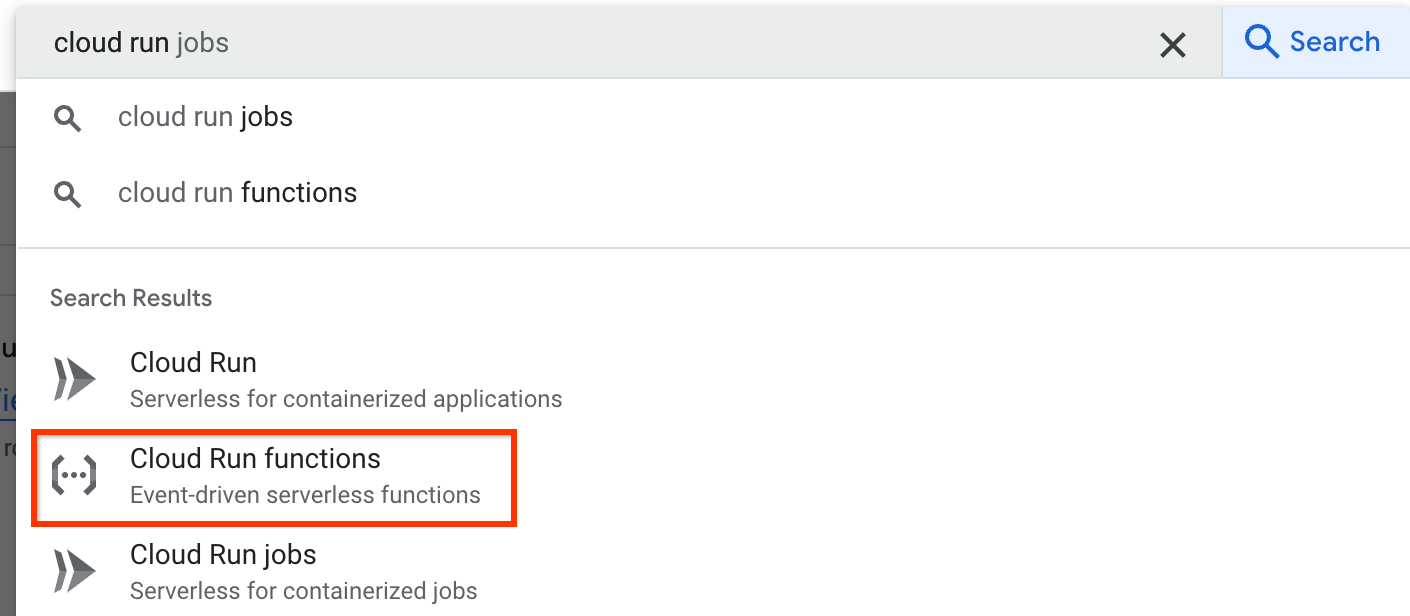

Join the missing data

Use a Cloud Run function to run the backfill code automatically whenever the completeness signal arrives through Pub/Sub:
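When a Pub/Sub-triggered function runs, the event data looks like `{"message": {"data": "<base64>", ...}, ...}`, and for a Log Router sink the decoded data is the JSON log entry. The sketch below covers only that decoding step with the standard library; in a deployed Python function you would typically register a handler with the Functions Framework `@functions_framework.cloud_event` decorator and pass it `cloud_event.data`. The source doesn't describe the fields inside the log entry's payload, so the sketch only checks `logName`; the helper names are hypothetical.

```python
import base64
import json
from typing import Any

# Suffix of the log name matched by the sink's inclusion filter.
EXPECTED_LOG_SUFFIX = "/logs/analyticsdata.googleapis.com%2Ffresh_bigquery_export_status"


def decode_log_entry(event_data: dict[str, Any]) -> dict[str, Any]:
    """Decode the Cloud Logging entry carried in Pub/Sub-triggered event data."""
    raw = base64.b64decode(event_data["message"]["data"])
    return json.loads(raw)


def is_completeness_signal(entry: dict[str, Any]) -> bool:
    """Return True if the entry comes from the fresh BigQuery export status log."""
    return entry.get("logName", "").endswith(EXPECTED_LOG_SUFFIX)


if __name__ == "__main__":
    # Simulated payload; the jsonPayload contents are hypothetical.
    entry = {
        "logName": "projects/my-project" + EXPECTED_LOG_SUFFIX,
        "jsonPayload": {"example": "value"},
    }
    data = {"message": {"data": base64.b64encode(json.dumps(entry).encode()).decode()}}
    print(is_completeness_signal(decode_log_entry(data)))
```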

- Open Cloud Run functions:

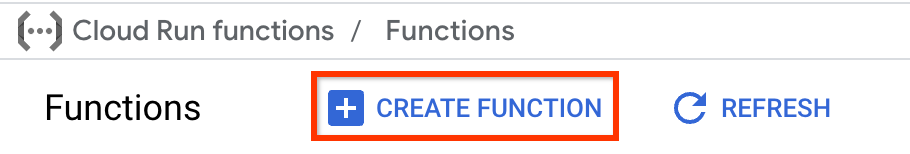

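- Click Create function.

- Select Cloud Run function as the environment.

- Enter a name for the function.

- Select Cloud Pub/Sub as the trigger type, and select the topic you created as the Cloud Pub/Sub topic.

- Click Next, then enter the code in the code box to join the Google Ads attribution data with your BigQuery export data.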
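The join itself would normally run as SQL in BigQuery, but its intended semantics can be shown with a small in-memory sketch: a left join that fills only the missing traffic source fields and leaves everything else untouched. It assumes a hypothetical `event_id` join key, a `traffic_source` dict per event, and the placeholder string `Data Not Available`; none of these names come from the actual export schema or Google Ads data.

```python
from typing import Any

MISSING = "Data Not Available"  # assumed placeholder value


def backfill_traffic_source(
    exported_events: list[dict[str, Any]],
    complete_sources: dict[str, dict[str, Any]],
) -> list[dict[str, Any]]:
    """Left-join complete traffic source data onto exported events.

    `complete_sources` maps a hypothetical join key (`event_id`) to the full
    traffic_source values retrieved for that event. Only fields that are absent
    or hold the placeholder are overwritten; unmatched events are copied as-is.
    """
    result = []
    for event in exported_events:
        complete = complete_sources.get(event.get("event_id"))
        if not complete:
            result.append(dict(event))  # no match: keep the row unchanged
            continue
        merged = dict(event)  # shallow copy so input rows are not mutated
        current = dict(event.get("traffic_source") or {})
        for field, value in complete.items():
            if current.get(field) in (None, MISSING):
                current[field] = value
        merged["traffic_source"] = current
        result.append(merged)
    return result


if __name__ == "__main__":
    # Illustrative rows only.
    exported = [
        {"event_id": "e1", "traffic_source": {"source": MISSING, "medium": MISSING}},
        {"event_id": "e2", "traffic_source": {"source": "newsletter", "medium": "email"}},
    ]
    complete = {"e1": {"source": "google", "medium": "cpc"}}
    for row in backfill_traffic_source(exported, complete):
        print(row["event_id"], row["traffic_source"])
```

Overwriting only placeholder fields means values the export already had are never replaced, which keeps a rerun of the backfill from changing rows that were already complete.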