Page Summary

- The Depth API helps devices understand the size and shape of real objects in a scene by creating depth images to blend the virtual with the real in AR apps.
- The Depth API can be used for various purposes in AR development, including enabling occlusion, transforming scenes, adding distance and depth of field effects, enabling user interactions with AR objects, and improving hit-tests.
- The Depth API uses a depth-from-motion algorithm to create depth images and merges data from available hardware sensors like ToF for enhanced accuracy.
- Depth data is most accurate when the device is half a meter to about five meters away from the real-world scene, and experiences that encourage device movement yield better results.

Platform-specific guides

- Android (Kotlin/Java)
- Android NDK (C)
- Unity (AR Foundation)
- Unreal Engine

As an AR app developer, you want to seamlessly blend the virtual with the real for your users. When a user places a virtual object in their scene, they want it to look like it belongs in the real world. If you’re building an app for users to shop for furniture, you want them to be confident that the armchair they’re about to buy will fit into their space.

The Depth API helps a device’s camera understand the size and shape of the real objects in a scene. It creates depth images, or depth maps, that add a layer of realism to your apps. You can use the information in a depth image to enable immersive and realistic user experiences.

Use cases for developing with the Depth API

The Depth API can power object occlusion, improved immersion, and novel interactions that enhance the realism of AR experiences. The following are some ways you can use it in your own projects. For examples of Depth in action, explore the sample scenes in the ARCore Depth Lab, which demonstrates different ways to access depth data. This Unity app is open source on GitHub.

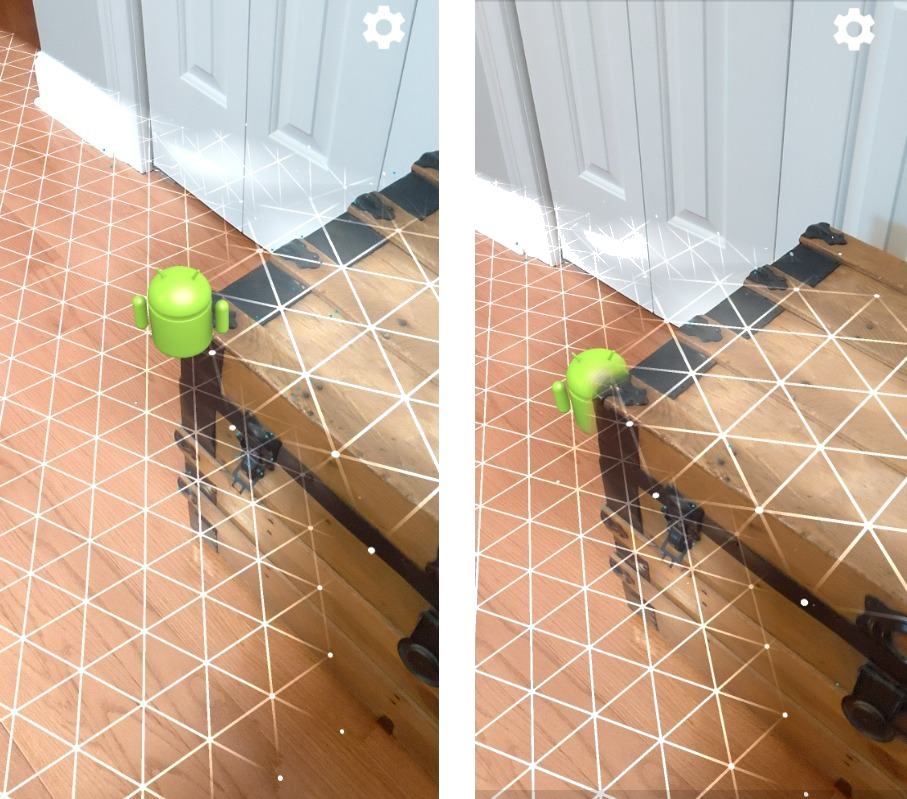

Enable occlusion

Occlusion, or accurately rendering a virtual object behind real-world objects, is paramount to an immersive AR experience. Consider a virtual Andy that a user may want to place in a scene containing a trunk beside a door. Rendered without occlusion, the Andy will unrealistically overlap with the edge of the trunk. If you use the depth of a scene and understand how far away the virtual Andy is relative to surroundings like the wooden trunk, you can accurately render the Andy with occlusion, making it appear much more realistic in its surroundings.
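At its core, depth-based occlusion is a per-pixel comparison: a virtual fragment is hidden wherever the real surface at that pixel is closer to the camera. The Java sketch below assumes you already have both distances in meters for one pixel; the `Occlusion` class, its `visibility` method, and the soft-edge `blendMeters` parameter are hypothetical and not part of ARCore. The blend band fades the virtual object near the real surface instead of cutting it off hard, which can mask some noise in the depth estimate.

```java
/** Hypothetical per-pixel occlusion helper (not an ARCore API). */
final class Occlusion {
    private Occlusion() {}

    /**
     * @param realDepthMeters    depth of the real scene at this pixel
     * @param virtualDepthMeters distance of the virtual fragment from the camera
     * @param blendMeters        width of the soft transition band (assumed tuning value)
     * @return 1 if the fragment is fully visible, 0 if fully hidden, in between near edges
     */
    static float visibility(float realDepthMeters, float virtualDepthMeters, float blendMeters) {
        if (blendMeters <= 0f) {
            // Hard occlusion: visible only if the fragment is not behind the real surface.
            return virtualDepthMeters <= realDepthMeters ? 1f : 0f;
        }
        // Positive when the real surface lies behind the virtual fragment.
        float gap = realDepthMeters - virtualDepthMeters;
        // Map gap in [-blend/2, +blend/2] linearly onto [0, 1] and clamp.
        float t = gap / blendMeters + 0.5f;
        return Math.max(0f, Math.min(1f, t));
    }
}
```

In a real renderer this comparison usually runs in a fragment shader; it is written in Java here only so the logic can be read and tested on its own.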

Transform a scene

Transport your users into a new, immersive world by rendering virtual snowflakes that settle on the arms and pillows of their couches, or by casting their living room in a misty fog. You can use Depth to create a scene where virtual lights interact with, hide behind, and relight real objects.
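A depth-aware fog can be approximated with the classic exponential fog model: the farther away a real pixel is, the more its color is blended toward the fog color. This is a generic rendering technique shown as a sketch; the `DepthFog` class, the `density` parameter, and the per-channel blend are illustrative assumptions, not ARCore APIs.

```java
/** Hypothetical exponential-fog helper driven by per-pixel real-world depth. */
final class DepthFog {
    private DepthFog() {}

    /** Fog amount: 0 at the camera, approaching 1 as depth grows. */
    static double fogFactor(double depthMeters, double density) {
        // Exponential fog; negative depths are treated as zero.
        return 1.0 - Math.exp(-density * Math.max(0.0, depthMeters));
    }

    /** Blends one color channel (0..1) of the camera pixel toward the fog color. */
    static double applyFog(double cameraChannel, double fogChannel,
                           double depthMeters, double density) {
        double f = fogFactor(depthMeters, density);
        return cameraChannel * (1.0 - f) + fogChannel * f;
    }
}
```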

Distance and depth of field

Need to show that something is far away? With the Depth API, you can measure distance and add depth-of-field effects, such as blurring out the background or foreground of a scene.
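One simple way to drive a depth-of-field effect is to choose a blur radius for each pixel from how far its depth lies from a chosen focus distance. The sketch uses a linear ramp, which is a heuristic assumption rather than a physical lens model; `DepthOfField.blurRadius` and all of its parameters are hypothetical.

```java
/** Hypothetical depth-of-field helper: maps per-pixel depth to a blur radius. */
final class DepthOfField {
    private DepthOfField() {}

    /**
     * Pixels within focusRangeMeters of focusMeters stay sharp; beyond that the blur
     * grows linearly over transitionMeters and is capped at maxRadiusPx.
     */
    static float blurRadius(float depthMeters, float focusMeters, float focusRangeMeters,
                            float transitionMeters, float maxRadiusPx) {
        // Distance outside the in-focus band, in either direction (foreground or background).
        float outOfFocus = Math.abs(depthMeters - focusMeters) - focusRangeMeters;
        if (outOfFocus <= 0f) {
            return 0f; // inside the in-focus band
        }
        float t = Math.min(1f, outOfFocus / transitionMeters);
        return t * maxRadiusPx;
    }
}
```

Because the distance from focus is taken as an absolute value, the same function blurs both the foreground and the background; a one-sided background blur would drop the `Math.abs`.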

Enable user interactions with AR objects

Allow users to “touch” the world through your app by enabling virtual content to interact with the real world through collision and physics. Have virtual objects go over real-world obstacles, or have virtual paintballs hit and splatter onto a real-world tree. When you combine depth-based collision with game physics, you can make an experience come to life.
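A common way to detect collisions against the real world is to project a virtual object's position into the depth image and compare its distance with the depth stored at that pixel. This sketch assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy` in depth-image pixels; camera-space coordinates with +x right, +y down, and +z forward; and a row-major float array of depths in meters in which 0 means no data. All of these, and the `DepthCollision` class itself, are illustrative assumptions; a real app must convert from its engine's own camera conventions.

```java
/** Hypothetical depth-based collision check (not an ARCore API). */
final class DepthCollision {
    private DepthCollision() {}

    /** True if camera-space point (x, y, z) has reached or passed the real surface at its pixel. */
    static boolean collides(float x, float y, float z,
                            float[] depthMeters, int width, int height,
                            float fx, float fy, float cx, float cy) {
        if (z <= 0f) {
            return false; // behind the camera: nothing to compare against
        }
        // Pinhole projection into depth-image pixel coordinates.
        int u = Math.round(fx * x / z + cx);
        int v = Math.round(fy * y / z + cy);
        if (u < 0 || u >= width || v < 0 || v >= height) {
            return false; // projects outside the depth image
        }
        float real = depthMeters[v * width + u];
        // 0 is assumed to mean "no depth available" at this pixel.
        return real > 0f && z >= real;
    }
}
```

Calling `collides` on each physics step for a moving paintball, and spawning a splat where it first returns true, is one way to approach the tree-splatter effect.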

Improve hit-tests

Depth can be used to improve hit-test results. Plane hit-tests only work on planar surfaces with texture, whereas depth hit-tests are more detailed and work even on non-planar and low-texture areas. This is because depth hit-tests use depth information from the scene to determine the correct depth and orientation of a point.
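The extra detail a depth hit-test provides can be sketched in two steps: back-project the tapped pixel into 3D using its depth, then estimate the surface orientation from neighboring depth samples with a cross product. The `DepthHitTest` class, its return layout, and the camera conventions (pinhole intrinsics in depth-image pixels, +x right, +y down, +z forward, 0 meaning missing depth) are assumptions for illustration. ARCore exposes its own hit-test API on each platform, and this sketch does not describe how ARCore implements it.

```java
/** Hypothetical depth hit-test: 3D point plus estimated surface normal. */
final class DepthHitTest {
    private DepthHitTest() {}

    /** Back-projects pixel (u, v) with depth d (meters) into camera space. */
    static double[] unproject(int u, int v, double d,
                              double fx, double fy, double cx, double cy) {
        return new double[] {(u - cx) * d / fx, (v - cy) * d / fy, d};
    }

    /** Returns {x, y, z, nx, ny, nz}, or null if depth is missing at (u, v) or its neighbors. */
    static double[] hitTest(float[] depthMeters, int width, int height, int u, int v,
                            double fx, double fy, double cx, double cy) {
        if (u < 0 || v < 0 || u + 1 >= width || v + 1 >= height) {
            return null; // need right and lower neighbors inside the image
        }
        float d = depthMeters[v * width + u];
        float dRight = depthMeters[v * width + u + 1];
        float dDown = depthMeters[(v + 1) * width + u];
        if (d <= 0f || dRight <= 0f || dDown <= 0f) {
            return null; // 0 assumed to mean "no data"
        }
        double[] p = unproject(u, v, d, fx, fy, cx, cy);
        double[] pr = unproject(u + 1, v, dRight, fx, fy, cx, cy);
        double[] pd = unproject(u, v + 1, dDown, fx, fy, cx, cy);
        double[] a = {pd[0] - p[0], pd[1] - p[1], pd[2] - p[2]}; // step down the image
        double[] b = {pr[0] - p[0], pr[1] - p[1], pr[2] - p[2]}; // step right
        // a x b points back toward the camera for a surface facing it.
        double nx = a[1] * b[2] - a[2] * b[1];
        double ny = a[2] * b[0] - a[0] * b[2];
        double nz = a[0] * b[1] - a[1] * b[0];
        double len = Math.sqrt(nx * nx + ny * ny + nz * nz);
        if (len == 0.0) {
            return null; // degenerate neighborhood
        }
        return new double[] {p[0], p[1], p[2], nx / len, ny / len, nz / len};
    }
}
```

Because the normal comes from the depth samples themselves rather than from a detected plane, this approach also yields an orientation on curved or low-texture surfaces, which is the advantage described above.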

In the following example, the green Andys represent standard plane hit-tests and the red Andys represent depth hit-tests.

Device compatibility

The Depth API is only supported on devices with the processing power to support depth, and it must be enabled manually in ARCore, as described in Enable Depth.

Some devices may also provide a hardware depth sensor, such as a time-of-flight (ToF) sensor. Refer to the ARCore supported devices page for an up-to-date list of devices that support the Depth API and of devices that have a supported hardware depth sensor.

Depth images

The Depth API uses a depth-from-motion algorithm to create depth images, which give a 3D view of the world. Each pixel in a depth image holds a measurement of how far the scene is from the camera at that pixel. As the user moves their phone, the algorithm takes multiple device images from different angles and compares them to estimate the distance to every pixel. It selectively uses machine learning to improve depth processing, even when the user moves the device only slightly.

The algorithm also takes advantage of any additional hardware the user’s device might have. If the device has a dedicated depth sensor, such as ToF, the algorithm automatically merges data from all available sources. This enhances the existing depth image and enables depth even when the camera is not moving. It also provides better depth on surfaces with few or no features, such as white walls, and in dynamic scenes with moving people or objects.
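The principle behind comparing images taken from different positions is triangulation. In the simplest textbook case, two rectified views separated sideways by a baseline B, a feature that shifts by a disparity of d pixels lies at depth Z = f·B/d, where f is the focal length in pixels. The sketch implements only this relation; ARCore's depth-from-motion algorithm is more sophisticated, and `MotionStereo` is a hypothetical name.

```java
/** Hypothetical textbook triangulation helper (not ARCore's algorithm). */
final class MotionStereo {
    private MotionStereo() {}

    /**
     * Depth Z = f * B / d for a feature seen in two rectified frames taken
     * baselineMeters apart. Returns NaN when there is no parallax to triangulate.
     */
    static double depthFromDisparity(double focalPx, double baselineMeters, double disparityPx) {
        if (disparityPx <= 0.0) {
            return Double.NaN; // no measurable shift: the device did not move enough
        }
        return focalPx * baselineMeters / disparityPx;
    }
}
```

The relation also shows why movement helps: for a fixed disparity error Δd, the depth error is roughly Z²·Δd/(f·B), so a larger baseline B (more device motion) and a closer scene both give more precise estimates.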

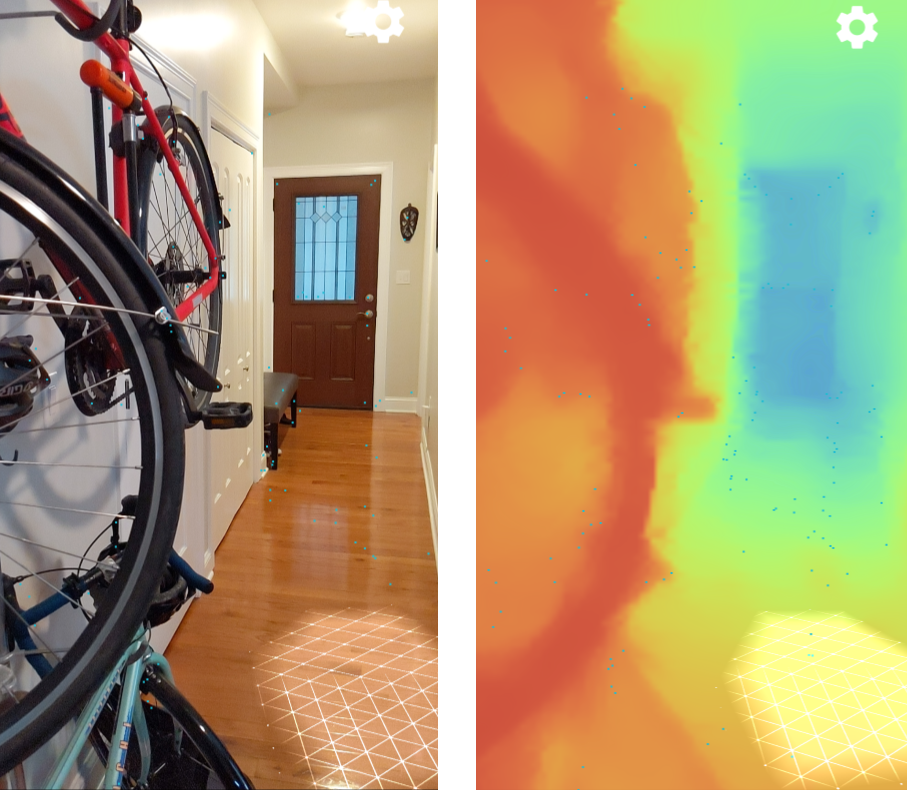

The following images show a camera image of a hallway with a bicycle on the wall, and a visualization of the depth image that is created from the camera images. Areas in red are closer to the camera, and areas in blue are farther away.
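A visualization like this maps each depth value to a color. The sketch uses a plain two-color ramp from red (near) to blue (far) between two chosen distances; the visualization in the images may use a richer color map, and `DepthColorMap`, `nearMeters`, and `farMeters` are hypothetical.

```java
/** Hypothetical depth-to-color mapping for debugging views. */
final class DepthColorMap {
    private DepthColorMap() {}

    /** Packed 0xRRGGBB: red at nearMeters, blue at farMeters, clamped outside that range. */
    static int toRgb(float depthMeters, float nearMeters, float farMeters) {
        float t = (depthMeters - nearMeters) / (farMeters - nearMeters);
        t = Math.max(0f, Math.min(1f, t));
        int red = Math.round(255 * (1f - t));
        int blue = Math.round(255 * t);
        return (red << 16) | blue; // green channel left at 0
    }
}
```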

Depth from motion

Depth data becomes available when the user moves their device. The algorithm can get robust, accurate depth estimates from 0 to 65 meters away, and the most accurate results come when the device is half a meter to about five meters away from the real-world scene. Experiences that encourage the user to move their device more tend to get progressively better results.
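The 0 to 65 meter range is consistent with storing each depth sample as an unsigned 16-bit number of millimeters (65,535 mm is about 65.5 m), the format described for images from ARCore's Android `Frame.acquireDepthImage16Bits()`. Assuming that encoding, this sketch converts a raw sample to meters and flags whether it falls in the half-meter to five-meter band quoted above; `DepthRange` and its constants are illustrative.

```java
/** Hypothetical helpers for 16-bit millimeter depth samples. */
final class DepthRange {
    private DepthRange() {}

    // Band quoted above as giving the most accurate results (assumed inclusive).
    static final float MOST_ACCURATE_MIN_METERS = 0.5f;
    static final float MOST_ACCURATE_MAX_METERS = 5.0f;

    /** Converts a raw 16-bit sample, read into a signed Java short, to meters. */
    static float toMeters(short rawMillimeters) {
        // Java shorts are signed; mask to recover the unsigned 0..65535 mm value.
        int mm = rawMillimeters & 0xFFFF;
        return mm / 1000f;
    }

    static boolean inMostAccurateRange(float meters) {
        return meters >= MOST_ACCURATE_MIN_METERS && meters <= MOST_ACCURATE_MAX_METERS;
    }
}
```

The mask matters: without it, any sample above 32,767 mm (about 32.8 m) would come back negative.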

Acquire depth images

With the Depth API, you can retrieve a depth image that matches every camera frame. An acquired depth image has the same timestamp and field-of-view intrinsics as the camera. Valid depth data is available only after the user has started moving their device around, since depth is acquired from motion. Surfaces with few or no features, such as white walls, yield imprecise depth.
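Reading a single depth value means indexing into the image's pixel buffer using its row and pixel strides. This sketch works on a plain `java.nio.ByteBuffer` laid out like a single-plane 16-bit image. On Android, the buffer, `rowStride`, and `pixelStride` would come from `Image.getPlanes()[0]` of the image returned by `Frame.acquireDepthImage16Bits()`, which can throw `NotYetAvailableException` before depth is ready. `DepthSampler` is a hypothetical helper, and native byte order is an assumption that matches Android devices.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical reader for a single-plane, 16-bit-per-pixel depth buffer. */
final class DepthSampler {
    private DepthSampler() {}

    /**
     * Depth in millimeters at pixel (x, y). rowStride and pixelStride are in bytes,
     * mirroring android.media.Image.Plane.
     */
    static int millimetersAt(ByteBuffer plane, int rowStride, int pixelStride, int x, int y) {
        int byteIndex = x * pixelStride + y * rowStride;
        // duplicate() so the caller's buffer order and position are left untouched.
        short raw = plane.duplicate().order(ByteOrder.nativeOrder()).getShort(byteIndex);
        return raw & 0xFFFF; // interpret as unsigned millimeters
    }
}
```

Using the strides rather than assuming `width * 2` bytes per row keeps the lookup correct when rows are padded.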

What’s next

- Check out the ARCore Depth Lab, which demonstrates different ways to access depth data.