效能最佳化從識別關鍵指標開始,這通常與延遲時間和處理量有關。新增可以擷取及追蹤這些指標的監控功能,也暴露了應用程式中的弱點。透過指標,就能最佳化效能指標。

此外,許多監控工具也可讓您為指標設定快訊,以便您達到特定門檻時收到通知。例如,您可以設定快訊,讓系統在失敗要求百分比增幅超過正常等級的 x% 時通知您。而 Monitoring 工具可協助您識別正常效能的情況,並找出延遲情況、錯誤數量和其他關鍵指標的異常波動。在業務重要時間範圍內,或新程式碼已推送至實際工作環境時,監控這些指標的能力尤為重要。

找出延遲指標

請務必盡可能讓 UI 保持回應速度,請留意使用者對於行動應用程式的標準更臻完善。另外也應該測量及追蹤後端服務的延遲時間,特別是因為如未勾選,可能會導致總處理量問題。

建議追蹤的指標包括:

- 要求時間長度

- 子系統精細程度的要求持續時間 (例如 API 呼叫)

- 工作時間長度

找出處理量指標

「處理量」會測量指定時間內提供的要求總數。處理量可能因子系統的延遲時間而受到影響,因此您可能需要針對延遲時間進行最佳化以改善總處理量。

以下列舉幾個建議追蹤的指標:

- 每秒查詢次數

- 每秒傳輸的資料量

- 每秒 I/O 作業數

- 資源使用率,例如 CPU 或記憶體用量

- 處理待處理工作的大小,例如 Pub/Sub 或執行緒數

不只是平均值

評估成效的常見錯誤只著重觀察平均值 (平均) 情況。雖然這很有用,但無法提供延遲分佈情形的深入分析。另一個值得追蹤的指標是效能百分位數,例如指標的第 50/75/90/99 個百分位數。

一般來說,最佳化作業可透過兩個步驟完成。首先,將延遲時間最佳化的第 90 個百分位數然後請考慮第 99 個百分位數,也稱為尾延遲,也就是需要較長時間完成的要求較小的部分。

提供詳細結果的伺服器端監控功能

伺服器端剖析通常適合用於追蹤指標。伺服器端通常更容易檢測,允許存取更精細的資料,而且較不受連線問題的擾動影響。

監控瀏覽器以端對端掌握情況

瀏覽器剖析功能可提供額外的使用者體驗深入分析。顯示哪些頁面的要求速度緩慢,然後與伺服器端監控建立關聯,以便進一步分析。

Google Analytics (分析) 可以在網頁操作時間報表中提供現成的頁面載入時間監控功能。這幾種實用的檢視方式有助於瞭解網站的使用者體驗,特別是:

- 網頁載入時間

- 重新導向載入時間

- 伺服器回應時間

在雲端監控

您可以使用許多工具擷取及監控應用程式的效能指標。舉例來說,您可以使用 Google Cloud Logging 將成效指標記錄到 Google Cloud 專案,然後在 Google Cloud Monitoring 中設定資訊主頁,以便監控及區隔記錄的指標。

請參閱 Logging 指南,以取得從 Python 用戶端程式庫的自訂攔截器記錄至 Google Cloud Logging 的範例。有了 Google Cloud 提供的這些資料,您就能在記錄資料之上建構指標,透過 Google Cloud Monitoring 掌握應用程式情況。請參閱使用者定義的記錄指標指南,使用傳送至 Google Cloud Logging 的記錄,建構指標。

或者,您可以使用 Monitoring 用戶端程式庫在程式碼中定義指標,並將指標直接傳送至 Monitoring,與記錄分開。

記錄指標範例

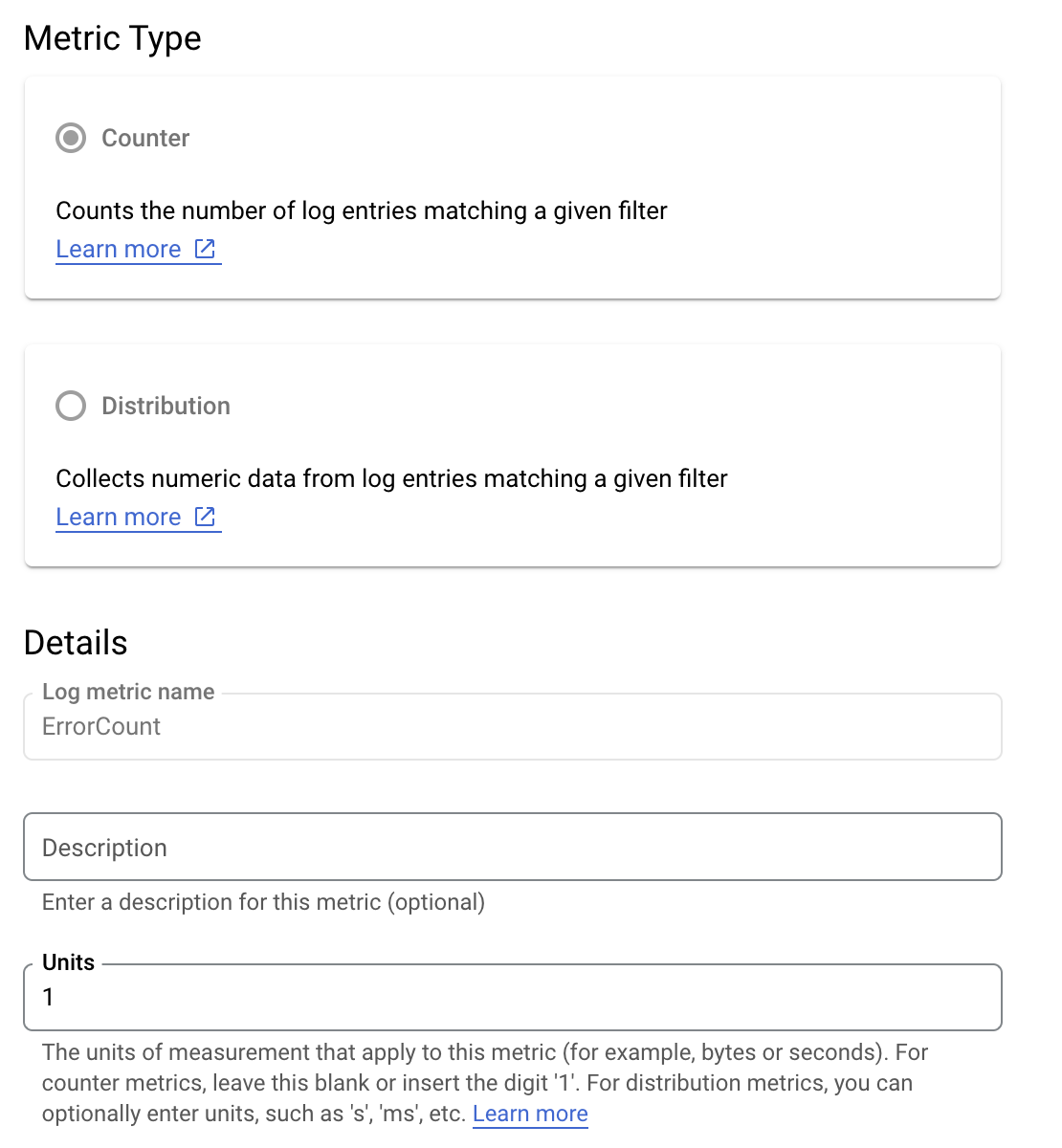

假設您要監控 is_fault 值,進一步瞭解應用程式的錯誤率。您可以將 is_fault 值從記錄檔擷取到新的計數器指標 ErrorCount。

在 Cloud Logging 中,「標籤」可讓您依據記錄中的其他資料將指標分組。您可以為傳送至 Cloud Logging 的 method 欄位設定標籤,以便查看 Google Ads API 方法如何細分錯誤數量。

設定 ErrorCount 指標和 Method 標籤後,您就可以在 Monitoring 資訊主頁中建立新的圖表,以按照 Method 分組監控 ErrorCount。

快訊

您可以在 Cloud Monitoring 和其他工具中設定快訊政策,以指定指標觸發快訊的時機和方式。如需設定 Cloud Monitoring 快訊的操作說明,請參閱快訊指南。