This page describes how to set a daily limit and how to monitor in-progress EECU-time, to help control compute costs in Earth Engine.

Limit daily EECU-time

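To help control your Earth Engine costs, you can update the following Cloud quota to limit how much EECU-time a project can consume per day:

Earth Engine compute time (EECU-time) per day in seconds: a project-level quota that limits the total EECU-time across all users of the project.

For more about the Earth Engine resource quotas you can set, see Earth Engine resource quotas.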

Set a daily limit

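You can view and edit quotas on the Quotas & System Limits page in the Google Cloud console. After you adjust a quota, the change takes effect within a few minutes. To set or update the daily limit: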

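- Verify that you have permission to change project quotas in the selected project.

- Go to the Quotas page in the Google Cloud console.

- Use the Metric filter in the filter search box to filter for earthengine.googleapis.com/daily_eecu_usage_time. If you don't see the Earth Engine compute time (EECU-time) per day in seconds quota, confirm that you've enabled the Earth Engine API for the selected project.

- Click Edit quota in the three-dot menu.

- If the Unlimited checkbox is selected, clear it.

- In the New value field, enter the EECU-seconds limit you want. Click Submit request.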

For more about viewing and managing quotas, see View and manage quotas.
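
The quota value is entered in EECU-seconds, so a budget that you reason about in EECU-hours has to be converted before it goes into the New value field. The following is a minimal sketch of that arithmetic only. The function name `eecu_hours_to_quota_seconds` is hypothetical and is not part of any Earth Engine or Cloud API.

```python
def eecu_hours_to_quota_seconds(eecu_hours: float) -> int:
    """Convert a daily budget in EECU-hours to whole EECU-seconds."""
    if eecu_hours < 0:
        raise ValueError("budget must be non-negative")
    # 1 hour = 3600 seconds; truncate so the limit never exceeds the budget.
    return int(eecu_hours * 3600)


# Example: a 50 EECU-hour daily budget.
print(eecu_hours_to_quota_seconds(50))  # 180000
```

Truncating rather than rounding keeps the configured limit at or below the intended budget.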

Error message returned

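After you set a daily limit, Earth Engine returns the following error message if you exceed it:

Your usage exceeded the custom quota for 'earthengine.googleapis.com/daily_eecu_usage_time', which is adjustable by your administrator in the Google Cloud console: https://console.cloud.google.com/quotas/?project=_.

Once the quota is exceeded, Earth Engine requests fail until the quota resets the next day or an administrator raises the limit.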
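
Because requests keep failing until the quota resets, client code may want to tell this error apart from other failures and stop retrying. The sketch below assumes only that the error text contains the quota metric name, as the message quoted above does. The helper name `is_daily_eecu_quota_error` is hypothetical, and the exceptions are stand-ins rather than errors raised by the Earth Engine client library.

```python
QUOTA_METRIC = "earthengine.googleapis.com/daily_eecu_usage_time"


def is_daily_eecu_quota_error(error: Exception) -> bool:
    """Return True if the error text names the daily EECU-time quota."""
    return QUOTA_METRIC in str(error)


# Stand-in exceptions for illustration only.
quota_error = RuntimeError(
    f"Your usage exceeded the custom quota for '{QUOTA_METRIC}'"
)
other_error = RuntimeError("Computation timed out.")

print(is_daily_eecu_quota_error(quota_error))  # True
print(is_daily_eecu_quota_error(other_error))  # False
```

A caller could use a check like this inside an exception handler to pause further requests until the next day instead of retrying immediately.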

Granular monitoring and alerting

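If you need finer-grained cost control and monitoring than a daily limit provides, the following approaches take more setup but enable alerting and cancellation at the workload_tag and batch task level.

These recipes use the EECU-time monitoring that is reported for in-progress requests. For more about in-progress EECU-time reporting in Cloud Monitoring, see the Monitoring usage guide.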

Configure alerting

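You can configure alerts in Cloud Monitoring to warn you when a metric reaches a particular threshold. The Cloud Monitoring alerting system is very flexible. We've collected a few of our favorite recipes here, but feel free to mix the ingredients to suit your own needs.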

Recipe: Chat notification for workload_tag usage

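This recipe shows how to set up a chat notification (for example, a Google Chat or Slack message) that is sent when Earth Engine compute usage for a given workload_tag exceeds a threshold. This is useful if, for example, you have a set of export tasks that create data for a production service and you want to be notified when those tasks together consume more than a certain amount of EECU-time.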

- 访问 Cloud 控制台的 Cloud Monitoring 部分中的提醒页面。

- 选择“创建政策”以配置新的提醒政策。

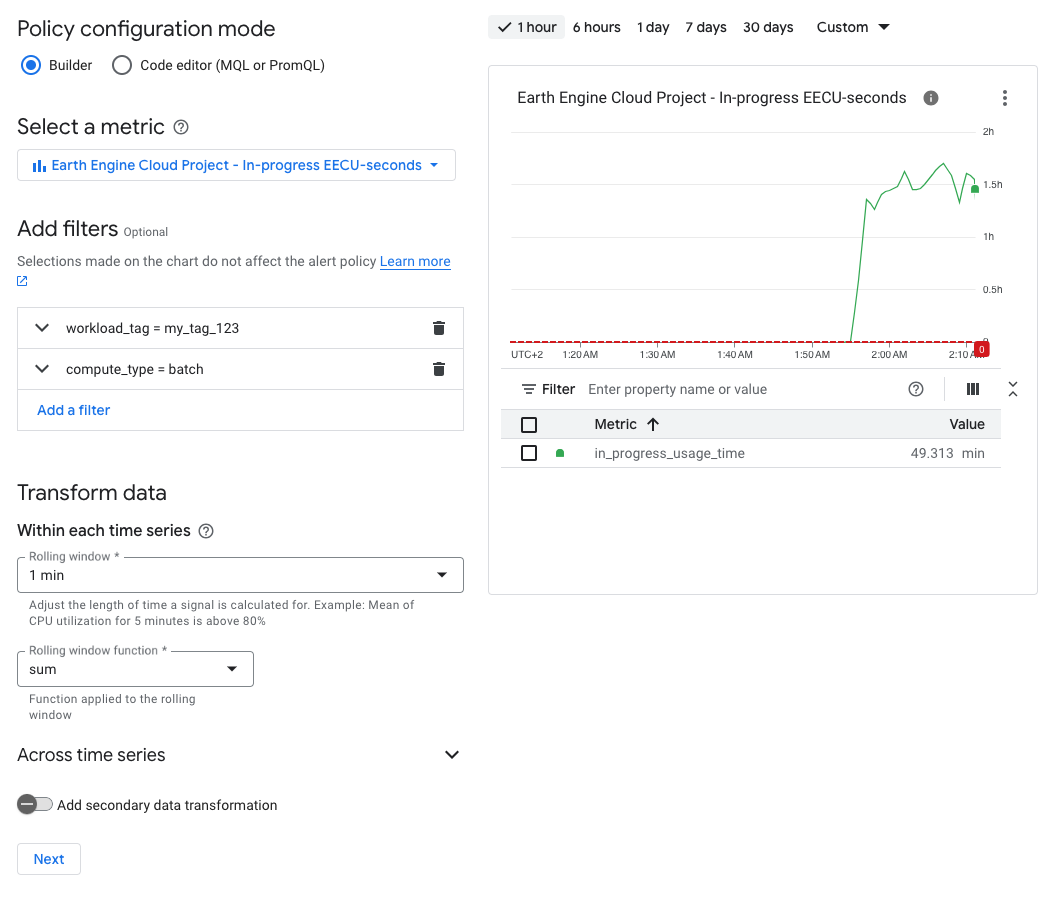

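- Select a metric:

  - In-progress EECU-seconds represents pending (not yet successful) compute seconds.

  - You may need to deselect the "Active" filter to see the metric.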

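- Add a filter:

  - Use workload_tag == your_workload_tag_value to filter to a specific workload tag.

  - Use compute_type = batch or compute_type = online to filter to a specific type of computation.

- Choose an appropriate "Rolling window" value. If you're unsure, use 5 min.

- Select "sum" from the "Rolling window function" menu.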

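- Choose an alert trigger and give it a name.

- Choose notification channels.

  - For this recipe, select "Manage notification channels" from the modal, then "Add new" to paste the space ID for Google Chat. You can find this ID in the URL of the Gmail or Chat page while viewing the chat.

  - If you're using Google Chat, you also need to add the Alerts app to your group by typing @Google Cloud Monitoring and selecting the appropriate app (if your organization permits it).

- Pick the relevant policy and severity labels.

- Write a brief documentation snippet.

- Publish the new alert policy!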

Once this is set up, you receive an alert in your chat room whenever usage for the filtered workload exceeds the threshold.
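
To build intuition for the "Rolling window" and "sum" settings in the steps above, the following sketch evaluates a threshold against the sum of samples inside a trailing window. It is a conceptual illustration only: the function name `rolling_sum_alerts` and the sample values are made up, and Cloud Monitoring's actual alignment and evaluation of time series may differ in detail.

```python
from collections import deque


def rolling_sum_alerts(samples, window_seconds, threshold):
    """Yield (timestamp, window_sum) whenever the rolling sum exceeds threshold.

    samples: iterable of (timestamp_seconds, eecu_seconds) pairs in time order.
    """
    window = deque()
    total = 0.0
    for timestamp, value in samples:
        window.append((timestamp, value))
        total += value
        # Drop samples that fell out of the window ending at `timestamp`.
        while window and window[0][0] <= timestamp - window_seconds:
            _, old_value = window.popleft()
            total -= old_value
        if total > threshold:
            yield timestamp, total


# Made-up samples: one per minute, with a burst starting at t=240.
samples = [(0, 10), (60, 10), (120, 10), (180, 10), (240, 200), (300, 200)]
alerts = list(rolling_sum_alerts(samples, window_seconds=300, threshold=300))
print(alerts)  # [(300, 430.0)]
```

With a 5-minute (300-second) window, the single burst at t=240 stays under the threshold, and the condition is met only once the second burst sample arrives. A longer window smooths out short spikes but also reacts more slowly.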

Recipe: Email alert for total in-progress EECU-time

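Follow the chat notification recipe, with two changes:

- Skip the step that adds the workload_tag filter, so that all values are included.

- When choosing notification channels, add your email address instead of configuring a chat channel.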

Alert latency and timing

Note that monitoring reports take some time to propagate, so don't expect notifications to arrive immediately.

Cancel resource-intensive tasks

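Given a limit, you can use the Earth Engine API to periodically check the list of pending tasks and request cancellation of any running task that has exceeded the EECU-seconds limit.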

Recipe: Run a snippet in a notebook or local Python shell

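    import time

    import ee

    # Assumes ee.Authenticate() and ee.Initialize() have already been run.
    eecu_seconds_limit = 50 * 60 * 60  # 50 hours

    print("Watching for operations to cancel...")
    while True:
        for op in ee.data.listOperations():
            metadata = op['metadata']
            # Only running operations can still accumulate EECU-time.
            if metadata['state'] == 'RUNNING':
                if metadata.get('batchEecuUsageSeconds', 0) > eecu_seconds_limit:
                    print(f"Cancelling operation {op['name']}")
                    ee.data.cancelOperation(op['name'])
        time.sleep(10)  # wait 10 seconds between checks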
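
The selection logic in the snippet above can be separated from the API calls so that it can be checked without an Earth Engine connection. This is a sketch under that assumption: the function name `operations_over_limit` and the sample records are hypothetical, and the records contain only the fields the snippet above reads (`name`, `metadata.state`, `metadata.batchEecuUsageSeconds`).

```python
def operations_over_limit(operations, eecu_seconds_limit):
    """Return names of RUNNING operations whose EECU usage exceeds the limit."""
    names = []
    for op in operations:
        metadata = op.get('metadata', {})
        if metadata.get('state') != 'RUNNING':
            continue
        # Operations that have not reported usage yet count as 0 seconds.
        if metadata.get('batchEecuUsageSeconds', 0) > eecu_seconds_limit:
            names.append(op['name'])
    return names


# Made-up operation records for illustration.
sample_operations = [
    {'name': 'projects/p/operations/A',
     'metadata': {'state': 'RUNNING', 'batchEecuUsageSeconds': 200000}},
    {'name': 'projects/p/operations/B',
     'metadata': {'state': 'RUNNING', 'batchEecuUsageSeconds': 10}},
    {'name': 'projects/p/operations/C',
     'metadata': {'state': 'SUCCEEDED', 'batchEecuUsageSeconds': 500000}},
    {'name': 'projects/p/operations/D',
     'metadata': {'state': 'RUNNING'}},
]
print(operations_over_limit(sample_operations, 50 * 60 * 60))
# ['projects/p/operations/A']
```

In the polling loop, the result of `ee.data.listOperations()` would be passed in as `operations`, and each returned name would be passed to `ee.data.cancelOperation`.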